24 Oct 2025 (updated) – From mid-August to early September 2025, network teams from the ITER project and from QST, under the Broader Approach, carried out a series of high-speed data transfer tests between the ITER Data Transfer Node (DTN) in Marseille and the JA REC facility in Japan. The tests were supported by members of the Asia-Pacific Europe Ring (AER) partner network (aer-network.net), whose international 100 Gbps links connect Europe, Asia, and the Pacific in a resilient ring topology.

The objectives were to validate throughput close to the 100 Gbps link capacity, test sustained performance, evaluate multi-path capabilities, and identify bottlenecks. This is critical preparation for ITER's operational phase in 2034, when petabytes of experimental data will need to be transferred globally in near real time.

The tests built on important infrastructure improvements made over the past few years. REC, via SINET, upgraded its connectivity to Amsterdam to 100 Gbps. At the same time, ITER built its data dissemination network node in Marseille, opening the way to 100 Gbps external connectivity through its providers RENATER and GÉANT. Together, these upgrades made a trans-continental 100 Gbps end-to-end test possible. For this test specifically, capacity was doubled with a second, independent 100 Gbps link via Singapore, which could either share the load or serve as reserve capacity if the primary link failed.

In this test run, three transfer tools were evaluated:

- ITER.Sync, a parallel rsync-based tool

- Fast Data Transfer (FDT)

- Massively Multi-Connection File Transfer Protocol (MMCFTP)

The tests consisted of two coordinated campaigns: one led by ITER using the ITER.Sync parallel rsync tool, and another led by QST using MMCFTP and FDT. Both aimed to validate end-to-end 100 Gbps transfers across the AER network under realistic operational conditions. The two campaigns provided complementary insights: ITER.Sync represented practical multi-file transfers for operational workflows, while MMCFTP and FDT demonstrated saturation of the link in controlled scenarios.
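
ITER.Sync is described here only as a parallel rsync-based tool; its internals are not given. As a minimal sketch of the general idea, assuming a hypothetical file list of (path, size) pairs, the Python below splits the files into groups of similar total size with a greedy largest-first heuristic and builds one `rsync --files-from` command per group, so that several rsync processes can run side by side. The function names, the list-file naming and the destination `receiver.example.org:/data/dst/` are illustrative assumptions, not ITER.Sync's actual design.

```python
import heapq
from typing import List, Tuple


def partition_files(files: List[Tuple[str, int]], n_workers: int) -> List[List[str]]:
    """Split (path, size_bytes) pairs into n_workers groups of similar total size.

    Greedy largest-first heuristic: each file goes to the currently
    least-loaded group. (Hypothetical helper, not part of ITER.Sync.)
    """
    if n_workers < 1:
        raise ValueError("n_workers must be at least 1")
    heap = [(0, i) for i in range(n_workers)]  # (bytes assigned, group index)
    heapq.heapify(heap)
    groups: List[List[str]] = [[] for _ in range(n_workers)]
    for path, size in sorted(files, key=lambda f: f[1], reverse=True):
        load, idx = heapq.heappop(heap)
        groups[idx].append(path)
        heapq.heappush(heap, (load + size, idx))
    return groups


def rsync_commands(groups: List[List[str]], src_root: str, dest: str) -> List[List[str]]:
    """Build one rsync argv per group; each reads its paths from a list file.

    The list files (filelist-0.txt, filelist-1.txt, ...) are assumed to be
    written beforehand, one path relative to src_root per line.
    """
    return [
        ["rsync", "-a", f"--files-from=filelist-{i}.txt", src_root, dest]
        for i in range(len(groups))
    ]


if __name__ == "__main__":
    files = [("a.h5", 100), ("b.h5", 90), ("c.h5", 50), ("d.h5", 40), ("e.h5", 10)]
    groups = partition_files(files, 2)
    print(groups)  # [['a.h5', 'd.h5', 'e.h5'], ['b.h5', 'c.h5']]
    for cmd in rsync_commands(groups, "/data/src/", "receiver.example.org:/data/dst/"):
        print(" ".join(cmd))
```

Each command could then be launched as a separate process (for example with `subprocess.Popen`), so that the aggregate rate comes from many concurrent TCP streams rather than from a single rsync session.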

The tools achieved comparable sustained throughput of 55 to 90 Gbps, depending on the network utilization strategy (collaborative versus exclusive), how the data sets were assembled, or simply daily variations in network load. With MMCFTP on tailored data sets in exclusive mode, throughput peaked at 91 Gbps of useful payload, demonstrating full utilization of the 100 Gbps channel.
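
Why 91 Gbps of useful payload counts as full utilization of a 100 Gbps channel can be checked with a back-of-the-envelope overhead calculation. The sketch below assumes plain TCP over IPv4 on Ethernet with standard per-frame overheads; the MTU values are illustrative, and nothing here describes the framing actually used by MMCFTP in these tests.

```python
# Per-frame Ethernet overhead on the wire, in bytes:
# preamble + start-of-frame delimiter (8), MAC header (14), FCS (4), inter-frame gap (12)
ETH_WIRE_OVERHEAD = 8 + 14 + 4 + 12
IPV4_HEADER = 20  # bytes, no IP options
TCP_HEADER = 20   # bytes, no TCP options (timestamps would add 12)


def max_goodput_gbps(line_rate_gbps: float, mtu: int, tcp_options: int = 0) -> float:
    """Upper bound on TCP payload rate for a given line rate and IP MTU (assumed framing)."""
    payload = mtu - IPV4_HEADER - TCP_HEADER - tcp_options
    on_wire = mtu + ETH_WIRE_OVERHEAD
    return line_rate_gbps * payload / on_wire


if __name__ == "__main__":
    for mtu in (1500, 9000):
        ceiling = max_goodput_gbps(100, mtu)
        print(f"MTU {mtu}: ceiling {ceiling:.2f} Gbps, 91 Gbps is {91 / ceiling:.0%} of it")
```

Under these assumptions the payload ceiling is roughly 95 Gbps with a 1500-byte MTU and about 99 Gbps with 9000-byte jumbo frames, so 91 Gbps of payload sits close to what the link can carry.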

In the current DT-1 scenarios, ITER pulses could generate on average 20 TB of data per hour of operation. The tests demonstrated that this volume could be transferred to the remote site within the hour with a good margin, as would be required for non-stop experimental campaigns.
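
The hourly budget can be checked with simple arithmetic. The sketch below assumes decimal units (1 TB = 10^12 bytes, 1 Gbps = 10^9 bit/s) and ignores protocol overhead; the 55 and 90 Gbps figures are the sustained range reported above.

```python
def required_gbps(terabytes: float, hours: float) -> float:
    """Average rate needed to move `terabytes` within `hours` (decimal units)."""
    bits = terabytes * 1e12 * 8
    return bits / (hours * 3600) / 1e9


def transfer_minutes(terabytes: float, rate_gbps: float) -> float:
    """Time in minutes to move `terabytes` at a sustained `rate_gbps` (decimal units)."""
    bits = terabytes * 1e12 * 8
    return bits / (rate_gbps * 1e9) / 60


if __name__ == "__main__":
    print(f"20 TB per hour needs {required_gbps(20, 1):.1f} Gbps on average")
    for rate in (55, 90):
        print(f"At {rate} Gbps, 20 TB takes {transfer_minutes(20, rate):.1f} minutes")
```

An hour of DT-1 data therefore needs a little over 44 Gbps on average, and the 55 to 90 Gbps sustained range would move it in roughly 30 to 49 minutes.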

For long transfers, ITER.Sync routinely sustained 500 TB per day, a figure limited in practice by the data transfer nodes currently available. Separate runs indicate that performance would scale linearly with production-level nodes. In one test, 176 TB of data in multiple file sets, comprising 327,000 individual files, was transferred in just under eight hours.
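
The long-transfer figures can be translated into average line rates and a typical file size. This is a sketch under the same decimal-unit assumption (1 TB = 10^12 bytes); because the 176 TB run took just under eight hours, using exactly eight hours gives a lower bound on its rate.

```python
def average_gbps(terabytes: float, hours: float) -> float:
    """Average rate in Gbps for `terabytes` moved in `hours` (decimal units)."""
    return terabytes * 1e12 * 8 / (hours * 3600) / 1e9


def mean_file_mb(terabytes: float, n_files: int) -> float:
    """Mean file size in MB (10^6 bytes)."""
    return terabytes * 1e12 / n_files / 1e6


if __name__ == "__main__":
    print(f"500 TB/day    -> {average_gbps(500, 24):.1f} Gbps average")
    print(f"176 TB in 8 h -> at least {average_gbps(176, 8):.1f} Gbps average")
    print(f"327,000 files -> {mean_file_mb(176, 327_000):.0f} MB per file on average")
```

Both long runs therefore correspond to roughly 46 to 49 Gbps sustained over many hours, with files averaging a little over half a gigabyte.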

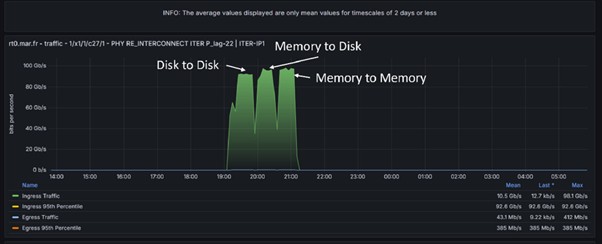

Finally, transfers over two physical paths in parallel were also demonstrated, using either independent sender-receiver pairs or lower-level load balancing via Equal-Cost Multi-Path (ECMP) routing. This capability would be essential in real operating scenarios, both for resilience to failures and for the collaborative use of shared scientific backbone networks. Figure 1 shows the test timeline, with transfers approaching the full 100 Gbps bandwidth.
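
ECMP typically keeps all packets of one flow on the same path by hashing header fields, which is why multi-stream tools can spread load over two paths while a single TCP stream cannot. The sketch below illustrates per-flow hashing over the 5-tuple. SHA-256 stands in for a router's hash, and the path labels, port numbers and addresses (taken from documentation ranges) are assumptions for illustration; real routers use vendor-specific hash functions and field selections.

```python
import hashlib
from collections import Counter
from typing import NamedTuple, Sequence


class Flow(NamedTuple):
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int
    proto: int  # IP protocol number, 6 = TCP


def ecmp_path(flow: Flow, paths: Sequence[str]) -> str:
    """Pick a path by hashing the flow's 5-tuple; the same flow always maps to the same path."""
    key = f"{flow.src_ip}|{flow.dst_ip}|{flow.src_port}|{flow.dst_port}|{flow.proto}".encode()
    digest = hashlib.sha256(key).digest()
    return paths[int.from_bytes(digest[:4], "big") % len(paths)]


if __name__ == "__main__":
    paths = ["path-1", "path-2"]  # hypothetical labels for the two physical routes
    # 64 parallel TCP streams between one sender and one receiver, differing only in source port
    flows = [Flow("192.0.2.10", "198.51.100.20", 50000 + i, 5001, 6) for i in range(64)]
    print(Counter(ecmp_path(f, paths) for f in flows))
```

Because each stream is pinned to one path, a single TCP connection is limited to the capacity of one path; running many parallel streams is what lets per-flow ECMP make use of both 100 Gbps links.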

Figure 2 shows MMCFTP speed test results, aimed at demonstrating full bandwidth utilization, for three data transfer modes: Disk to Disk (D2D), Memory to Disk (M2D) and Memory to Memory (M2M).

“From the network side, the results of the maximum speed test were perhaps the most impressive, considering the fluctuations that were observed in the circuits. In this test, we used two paths simultaneously and achieved results approaching the bandwidth limit, so it was important that we were able to use the Singapore route. The improved fault tolerance achieved by connecting ITER and QST via a redundant route was an important achievement.” – commented by Kenjiro Yamanaka, Senior Researcher at the Advanced ICT Center, National Institute of Informatics (NII).

“The tests were conducted using snapshots of real ITER commissioning and LHD physics data¹. While the production data transfer systems have not yet been deployed, and data pre- and post-transmission sequences would still require more work, the tests have demonstrated that the inter-continental network performance would be sufficient for efficient remote participation in ITER experiments, even in the most demanding scenarios.” – commented by Denis Stepanov, Computing Coordinating Engineer at the ITER Organization.

The AER network's 100 Gbps international links played a crucial role in enabling this redundancy and high throughput. The ring ensures that if one path experiences congestion or failure, traffic can be rerouted seamlessly via alternate routes such as Singapore or Amsterdam. In this test, running traffic over two simultaneous paths delivered both near-line-rate performance and enhanced resilience.

“These results not only demonstrate the technical feasibility of moving data at nearly 100 Gbps over intercontinental distances but also highlight the importance of international collaboration. The combination of advanced transfer tools, high-capacity storage, and resilient global networking is laying the groundwork for ITER’s future scientific workflows.” – commented by A/Prof. Francis Lee, Chair of AER.

“These results provide confidence that flexible data processing scenarios could be implemented for ITER experiment data, including those that could require massive data transfers to locations on the other side of the globe.” – commented by Mikyung Park, ITER’s Data, Connectivity and Software Project Leader.

The ITER Scientific Data Center team plans to carry out further tests in the coming years to refine tools, protocols and infrastructure, ensuring the system will be ready to handle the immense data flows expected when the project goes live in 2034.

Acknowledgments

Special thanks to Denis Stepanov of the ITER Organization and Kenjiro Yamanaka of the National Institute of Informatics (NII) for their key contributions and collaboration in preparing this article.

This article was co-edited by Denis Stepanov (ITER), Kenjiro Yamanaka (NII) and Vee Len (SingAREN).

Reference

1. LHD physics data were provided by the National Institute for Fusion Science (NIFS) under the license terms at https://www-lhd.nifs.ac.jp/pub/RightsTerms.html